Engineering the Illusion of Space in 3D Audio: Signal Science of Spatial Audio & Psychoacoustics

“We treat the human ear like an analog sensor and the brain as the DSP. Our job is to feed it the right illusion.”

— Misha Krunic, Head of Acoustic Systems, Harman International

Welcome to the Theatre of the Mind

How can a pair of earbuds no larger than your thumb create the illusion of an orchestra in your living room or, worse, make your boss’s voice sound as if it’s whispering in your left ear on Zoom? Of course, it isn’t sorcery. Or rather, engineering is the real sorcery here: sleek, DSP-driven, psychoacoustically inspired engineering.

Spatial audio is the latest flex in the audio universe. But under the hype, it’s a lovely mix of maths, brain science, and signal trickery that messes with sound to fool your sense of space. So, if you’re an audio engineer, a developer, or just a sound tech nerd, this one’s for you.

“The beauty of psychoacoustics is that it lets you hack physics with perception. It’s cheaper to trick the brain than build more speakers.”

— Elaine Tsai, Lead DSP Architect, Sonos

What Is Spatial Audio, Really?

Here’s the thing: spatial audio is less of a product and more of a toolkit. It’s the science (and sometimes black magic) of tricking your ears and brain into thinking sound is coming from all around you.

Depending on the system, it can involve:

- Upmixing: Translating flat stereo into a 360° soundscape.

- Psychoacoustics: Exploiting how your brain interprets direction and distance.

- Beamforming: Shaping sound like a laser, steering it to specific zones.

- Crosstalk Cancellation: Preventing your left ear from eavesdropping on your right.

It’s the acoustic equivalent of VR, built from an array of speakers, microphones, and real-time DSP.

“The human brain can detect interaural time differences as small as 10 microseconds — more precise than most GPS systems.”

(Journal of the Acoustical Society of America)

Psychoacoustics: Fooling the Smartest Thing in the Room

When it comes to working out where a sound is coming from, our brains are a step behind the tech. Spatial audio takes advantage of that.

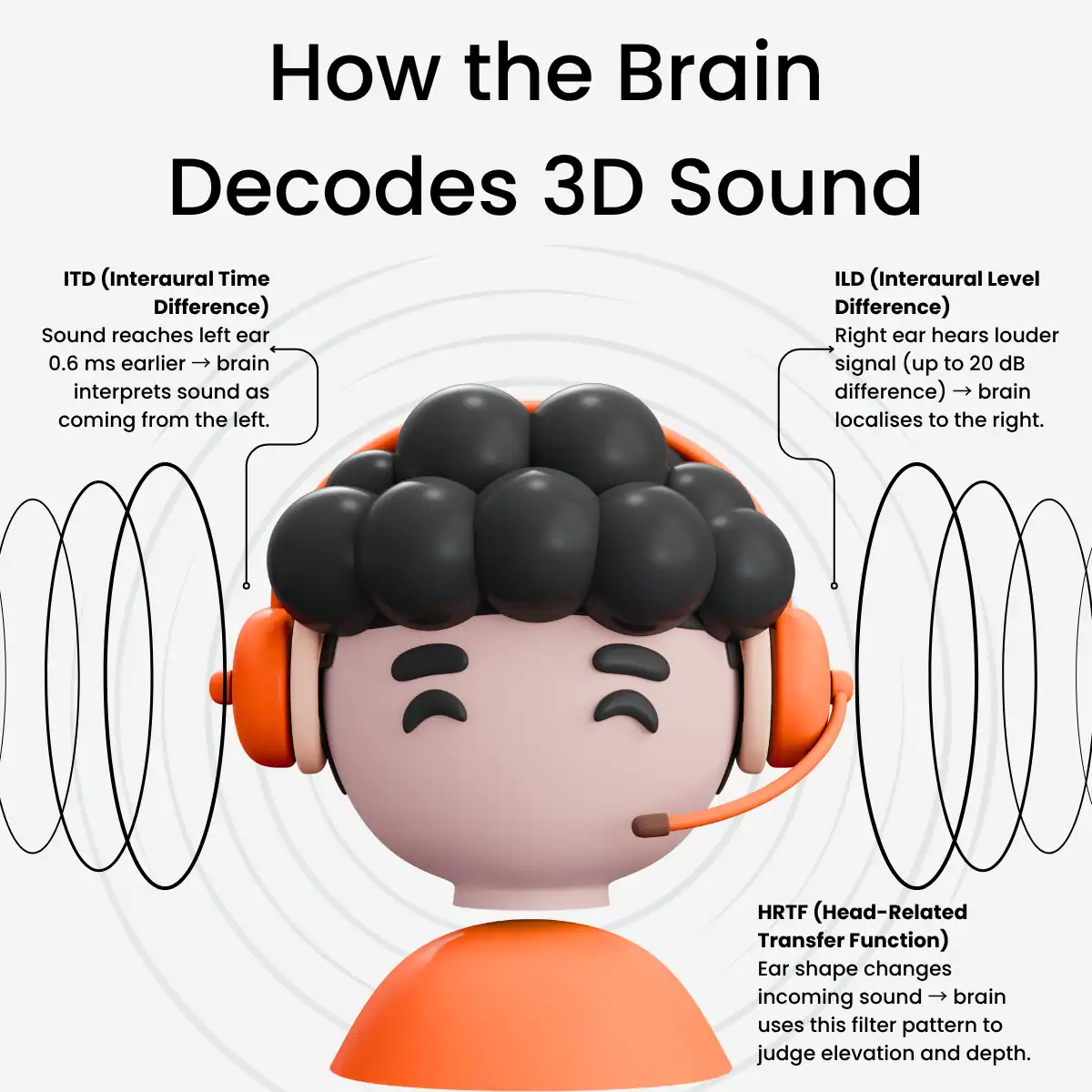

Your audio system exploits three major cues:

- ITD (Interaural Time Difference) – which ear gets the sound first.

- ILD (Interaural Level Difference) – which one hears it louder.

- HRTFs (Head-Related Transfer Functions) – how your strangely-shaped ears and head deform sound prior to its arrival at your brain.
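ITD is the easiest of the three cues to put numbers on. The sketch below uses Woodworth's spherical-head approximation, ITD = (a / c)(θ + sin θ), which is a textbook simplification and not what any particular product ships. The head radius of 8.75 cm and the 48 kHz sample rate are assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
HEAD_RADIUS = 0.0875    # m, an assumed average; real heads vary


def woodworth_itd(azimuth_deg: float) -> float:
    """ITD in seconds for a far-field source, spherical-head model.

    0 degrees is straight ahead, positive angles are to the right.
    The formula is only meant for sources within 90 degrees of front.
    """
    if abs(azimuth_deg) > 90:
        raise ValueError("model assumes |azimuth| <= 90 degrees")
    theta = math.radians(abs(azimuth_deg))
    itd = HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))
    # Keep the sign so callers know which ear leads.
    return math.copysign(itd, azimuth_deg)


def itd_in_samples(azimuth_deg: float, sample_rate_hz: int = 48000) -> float:
    """The same ITD expressed as a (fractional) delay in samples."""
    return woodworth_itd(azimuth_deg) * sample_rate_hz


if __name__ == "__main__":
    for az in (0, 15, 45, 90):
        print(az, "deg:", round(woodworth_itd(az) * 1e6), "microseconds")
```

With these assumptions a source hard to one side works out to roughly 0.66 ms, about 31 samples at 48 kHz. That is why renderers need fractional-sample delays to place sources at small angles.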

With some skillfully manipulated signals, engineers can make you perceive a helicopter hovering overhead when there is no helicopter anywhere.

Here’s a real-life flex: Apple’s AirPods Pro with Spatial Audio. They track your head in real time and render audio so that it stays “anchored” in space. You move your head, the audio stays put. That’s HRTFs, gyros, and DSP working overtime for the illusion.
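The "audio stays put" trick comes down to one subtraction: the renderer needs the source direction relative to the head, not relative to the room. Here is a minimal sketch of that step for yaw only. The function name and angle convention are hypothetical, and this is not a description of Apple's implementation.

```python
def relative_azimuth(source_az_deg: float, head_yaw_deg: float) -> float:
    """Direction of a world-anchored source as seen from the rotated head.

    Both angles are in degrees, positive to the right.
    The result is wrapped into the range [-180, 180).
    """
    return (source_az_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    # A source straight ahead; the listener turns 90 degrees to the right.
    # The renderer must now place the source 90 degrees to the left.
    print(relative_azimuth(0.0, 90.0))
```

The wrapped angle is what you would use to pick or interpolate an HRTF pair on each head-tracker update. A full renderer would also handle pitch and roll, typically with quaternions.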

“Over 70% of perceived spatial sound is shaped not by the speakers themselves, but by the listener’s own head-related transfer functions (HRTFs).”

Beamforming: Precision-Guided Audio Missiles

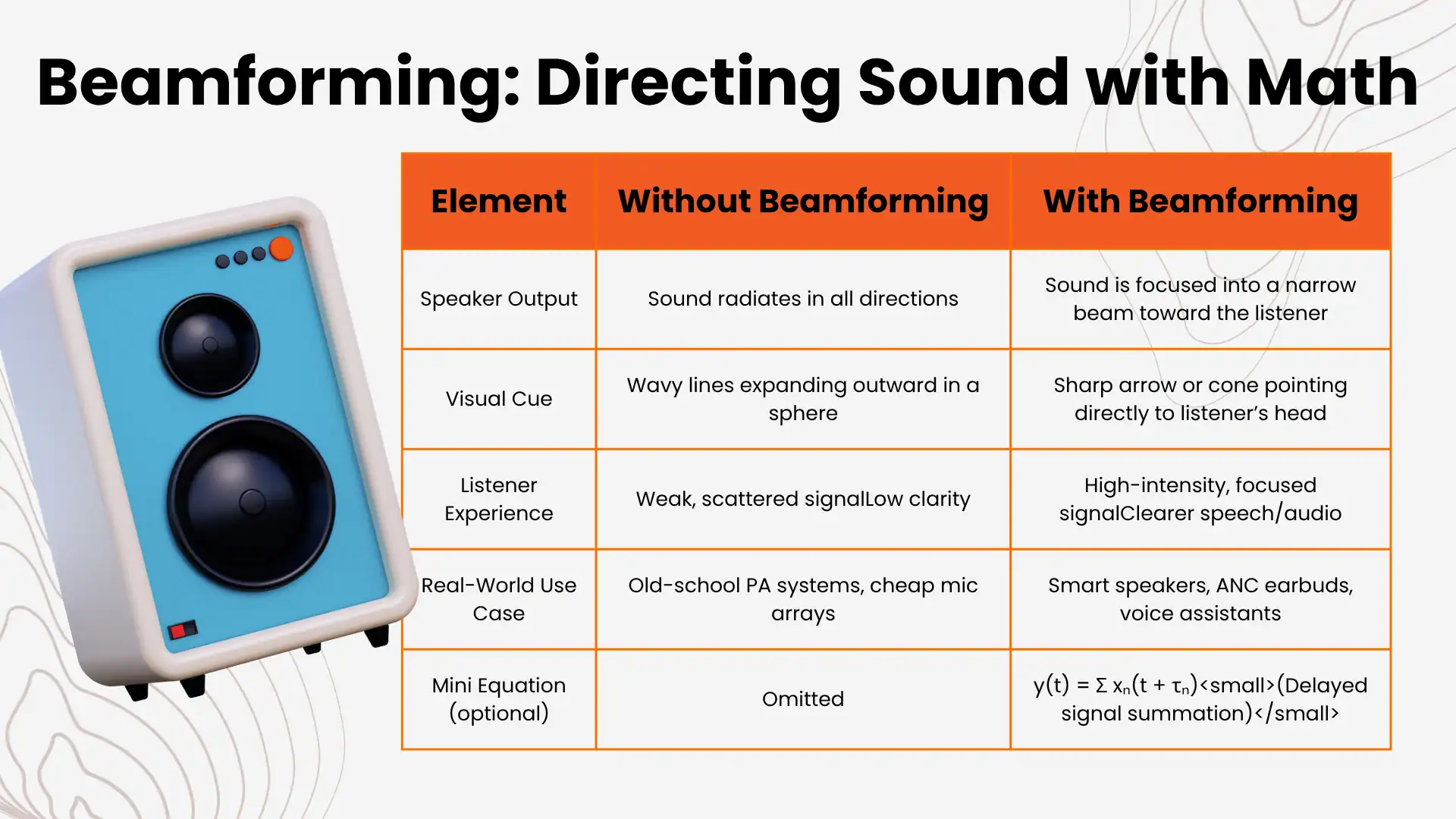

Beamforming is what you get when you give sound a sense of direction. Instead of sending (or capturing) audio in every direction, multiple speaker or mic elements work together, delaying, amplifying, and shaping the wavefront to create beams.

It’s how smart speakers “find” your voice in a noisy kitchen, and how earbuds are able to discern your mumble and filter out the blender.

Use Cases:

- Voice assistants: Beam your command, ignore the chaos.

- Earbuds: Clean speech pickup, not much ambient junk.

- Smart speakers: Bounce sound off walls to generate faux surround.

Under the hood, it is all phased array math and constructive interference. But to the listener, it simply sounds cleaner and smarter.
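To make the "phased array math" concrete, here is a sketch of the simplest beamformer there is: delay-and-sum on a uniform linear array. It evaluates how strongly a plane wave from a given angle survives once the array is steered somewhere else. The mic count, spacing, and frequency are assumptions picked for illustration, and real products layer adaptive filtering on top of this.

```python
import cmath
import math


def steering_delays(num_mics, spacing_m, angle_deg, c=343.0):
    """Relative arrival delay (s) at each mic for a plane wave from angle_deg.

    0 degrees is broadside (perpendicular to the array). Delays can be
    negative; a real-time system would add a constant offset to keep
    them causal.
    """
    s = math.sin(math.radians(angle_deg))
    return [n * spacing_m * s / c for n in range(num_mics)]


def array_gain(num_mics, spacing_m, steer_deg, arrival_deg, freq_hz, c=343.0):
    """Normalized delay-and-sum response: 1.0 means fully in phase."""
    steer = steering_delays(num_mics, spacing_m, steer_deg, c)
    arrive = steering_delays(num_mics, spacing_m, arrival_deg, c)
    # Each mic contributes a phasor; compensation removes the steering delay.
    total = sum(
        cmath.exp(-2j * math.pi * freq_hz * (a - s))
        for a, s in zip(arrive, steer)
    )
    return abs(total) / num_mics


if __name__ == "__main__":
    # Assumed setup: 4 mics, 4 cm apart, 2 kHz tone, beam steered to 0 degrees.
    for angle in (0, 20, 40, 60):
        print(angle, "deg ->", round(array_gain(4, 0.04, 0, angle, 2000.0), 3))
```

A source on the steering direction adds up perfectly (constructive interference), while off-axis sources arrive with mismatched phases and partly cancel.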

“Beamforming in smart speaker arrays can improve far-field voice pickup accuracy by up to 40% in noisy environments.”

(Dolby Labs engineering whitepaper)

Upmixing: Turning Pancake Audio into Layer Cake

Here’s a dirty little secret: most audio we consume is still stereo. But that is no reason not to fake a full surround rig.

Upmixing takes boring stereo sound and expands it into multi-speaker or multi-driver surround using algorithms, spectral magic, and object repositioning.

Examples:

- Ambisonics: for immersive spheres of sound.

- Spectral envelope expansion: for widened perception.

- Object-based rendering: for precise instrument placement.

Sony’s 360 Reality Audio depends on these magic tricks to place vocals and instruments around your head. Harman’s in-car systems, including Logic 7 and QLS, employ upmixing to place violins in the back seat and vocals in front, all without ever laying a hand on the original mix.
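For a feel of what an upmixer does at its most basic, here is a passive sum/difference matrix: content common to both channels is routed to a center feed, and content that differs between them goes to a surround feed. This is a deliberately simple stand-in, not the algorithm behind Logic 7, QLS, or 360 Reality Audio, and the channel names are hypothetical.

```python
def passive_upmix(left, right):
    """Derive center and surround feeds from a stereo pair.

    left, right: equal-length sequences of samples.
    center   = 0.5 * (L + R)  -> what the two channels share (e.g. vocals)
    surround = 0.5 * (L - R)  -> what differs between them (e.g. ambience)
    """
    if len(left) != len(right):
        raise ValueError("channels must have the same length")
    center = [0.5 * (l + r) for l, r in zip(left, right)]
    surround = [0.5 * (l - r) for l, r in zip(left, right)]
    return {
        "front_left": list(left),
        "front_right": list(right),
        "center": center,
        "surround": surround,
    }


if __name__ == "__main__":
    # A dead-center (mono) signal lands entirely in the center feed.
    out = passive_upmix([0.5, -0.25], [0.5, -0.25])
    print(out["center"], out["surround"])
```

Commercial upmixers typically go much further, for example steering content per frequency band, but the sum/difference split is the intuition most of them start from.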

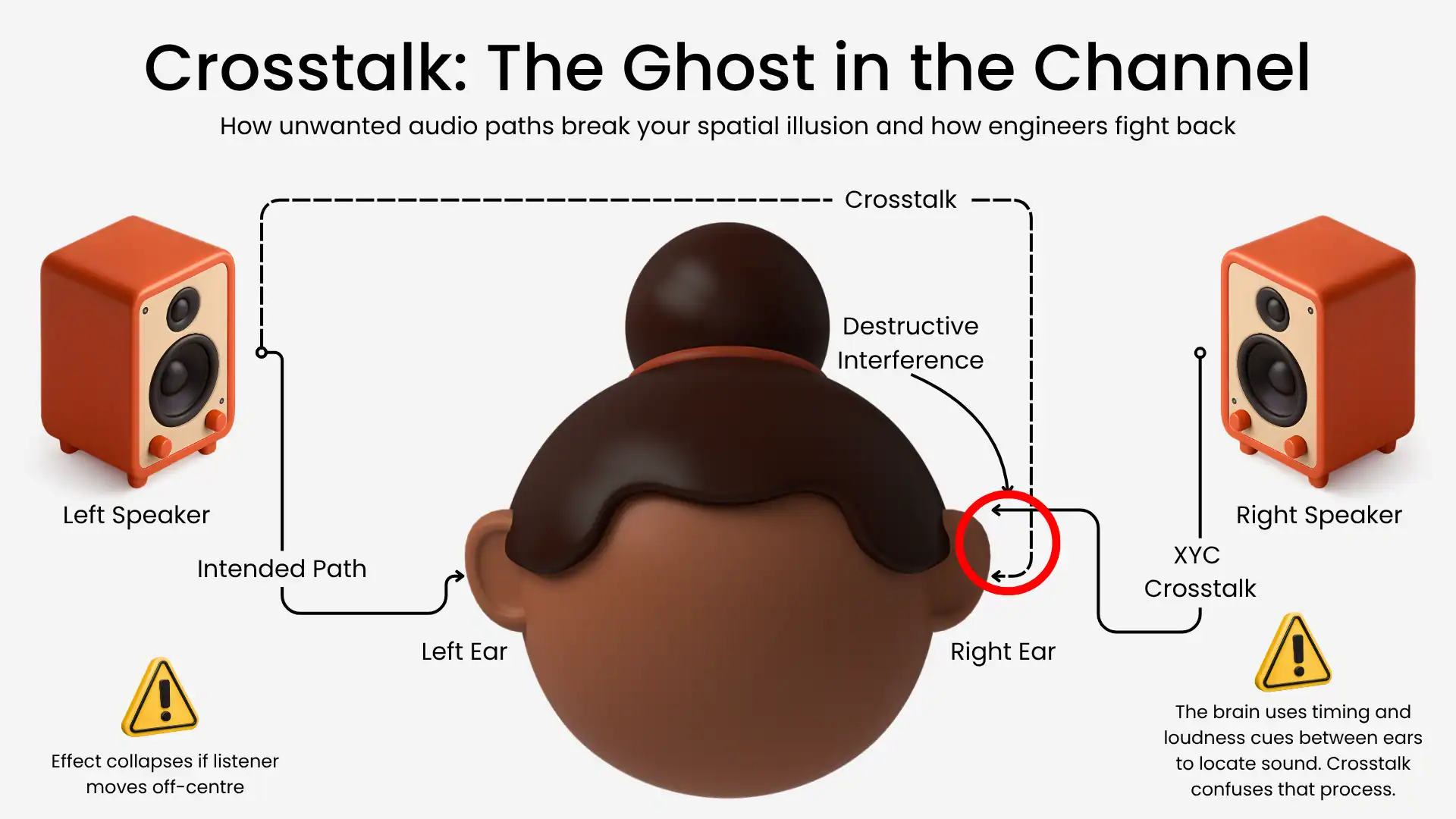

Crosstalk Cancellation: The Control of the Chaos

Spatial audio from two speakers would fall apart sooner than a bargain-basement tripod without crosstalk cancellation. Your ears are not isolated from each other: what comes out of the left speaker leaks into your right ear. That’s crosstalk, and it’s the nemesis of good spatial simulation.

XTC (Crosstalk Cancellation) solves this by shooting out a precisely inverted signal that neutralizes the bleed. Like a sound force field.

It does have its limits, however:

- It’s fragile. Shift your head, the effect falls apart.

- It’s location-limited, great in sweet spots, unreliable elsewhere.

However, when the subject is gaming headsets and virtual surround soundbars, it’s the behind-the-scenes MVP.
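Mathematically, the "inverted signal" is a matrix inverse. At a single frequency, the speaker-to-ear paths form a 2x2 matrix H, and the canceller is C = H⁻¹, so that H·C is the identity and each ear hears only its own channel. The sketch below assumes a toy symmetric model in which the cross path is just an attenuated, delayed copy of the direct path. The gain of 0.7 and the delay of 0.25 ms are made-up illustration values, not measurements.

```python
import cmath
import math


def acoustic_matrix(freq_hz, cross_gain=0.7, cross_delay_s=0.00025):
    """Toy speaker-to-ear transfer matrix at one frequency.

    Direct paths are 1; cross paths are attenuated and delayed.
    """
    cross = cross_gain * cmath.exp(-2j * math.pi * freq_hz * cross_delay_s)
    return [[1 + 0j, cross], [cross, 1 + 0j]]


def invert_2x2(m):
    """Plain 2x2 complex matrix inverse (no regularization)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if abs(det) < 1e-9:
        raise ValueError("matrix is (nearly) singular at this frequency")
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul_2x2(x, y):
    return [
        [sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


if __name__ == "__main__":
    canceller = invert_2x2(acoustic_matrix(1000.0))
    # Listener exactly where the filter was designed: crosstalk vanishes.
    ideal = matmul_2x2(acoustic_matrix(1000.0), canceller)
    # Listener has shifted, so the real cross-path delay is now different.
    moved = matmul_2x2(acoustic_matrix(1000.0, cross_delay_s=0.0003), canceller)
    print("residual crosstalk, ideal:", round(abs(ideal[0][1]), 6))
    print("residual crosstalk, moved:", round(abs(moved[0][1]), 3))
```

The second case shows the fragility in miniature: the filter was designed for one geometry, so a small change in the real acoustic path leaves crosstalk behind. Practical designs usually repeat this per frequency bin and add regularization where the matrix is close to singular.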

“XTC systems lose over 80% of effectiveness if the listener moves 15° off-axis.”

(Yamaha Acoustic Research Division)

Real Products That Make the Spatial Grade

Enough theory. Time to look at gear that actually walks the talk:

- Echo Studio: Spatial audio engine + upward drivers = reflective bounce-back sound.

- AirPods Pro (2nd Gen): Head tracking in real time, personalized HRTFs, all-day illusion alongside Apple Music.

- Harman Car Systems: Logic 7 & QLS distribute instruments around the cabin like a DJ with a PhD.

- Sonos Arc: Dolby Atmos soundbar with side and vertical-firing drivers. It throws sound around like it owes you money.

Each one blends psychoacoustics, beamforming, and processing smarts into an effortless, immersive experience.

The Engineering Reality Check

No point pretending it’s easy. Spatial audio design has trade-offs that keep engineers up at night:

- Latency: Get it under 20 ms or listeners will begin to notice.

- Battery drain: Beamforming and HRTFs are hungry.

- Room interference: Your perfectly shaped beam meets a sofa. Good luck.

- Miniaturisation: Try to fit this tech into a TWS bud. Go on, try.
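The latency trade-off is easy to sanity-check with arithmetic: every block of audio a stage buffers costs frames / sample_rate seconds. The sketch below adds up a hypothetical three-stage pipeline against the 20 ms figure mentioned above. The stage names and buffer sizes are placeholders, and wireless transport, which usually adds more, is not modeled.

```python
def buffer_latency_ms(frames: int, sample_rate_hz: int) -> float:
    """Delay contributed by buffering `frames` samples, in milliseconds."""
    return 1000.0 * frames / sample_rate_hz


def within_budget(stages_ms: dict, budget_ms: float = 20.0):
    """Return (total latency in ms, whether it fits the budget)."""
    total = sum(stages_ms.values())
    return total, total <= budget_ms


# Hypothetical pipeline: three stages, each buffering 256 frames at 48 kHz.
example_stages = {
    "input_buffer": buffer_latency_ms(256, 48000),
    "hrtf_convolution_block": buffer_latency_ms(256, 48000),
    "output_buffer": buffer_latency_ms(256, 48000),
}

if __name__ == "__main__":
    total, ok = within_budget(example_stages)
    print(f"total: {total:.2f} ms, under budget: {ok}")
```

Each 256-frame block at 48 kHz costs about 5.33 ms, so three of them already use 16 ms of the budget. Halving the block size buys latency back at the cost of more frequent processing, which feeds straight into the battery problem.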

It’s a design miracle any of this works as well as it does.

“By 2026, over 80% of wireless earbuds shipped will support some form of spatial audio rendering.”

(Counterpoint Research, Audio Trends Report)

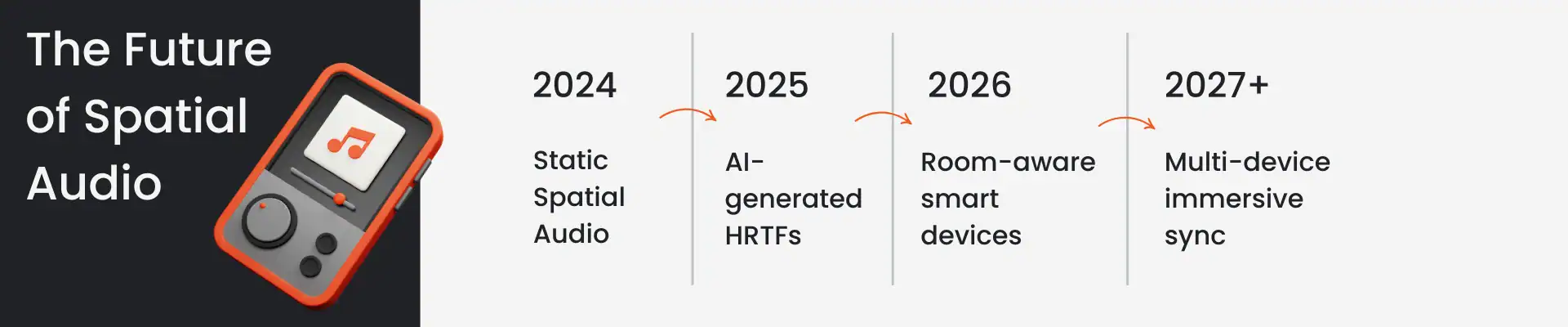

The Not-So-Distant Future

Here’s where it’s all headed:

- AI-tuned HRTFs: Scan your ear, get a perfect spatial profile. No more one-size-fits-none.

- Room-aware beam steering: Devices that scan the room and adapt in real time.

- Edge AI upmixing: Smart, real-time remixing without cloud dependence.

- Multi-device spatial syncing: Your TV, lamp, and toaster all coordinate to create a surround field. (Okay, maybe not the toaster.)

We’re heading toward adaptive audio that shapes itself around your space, your ears, and your movement.

Wrapping It Up: Engineering the Unbelievable

Spatial audio is believable because it is engineering designed to trick perception, and it does so well enough that we call it magic.

As engineers, we’re the stagehands of the illusion. Behind every “wow” moment is a stack of transfer functions, filter coefficients, and beam angle adjustments.

So the next time somebody says, “This sounds like I’m there,” tip your hat. That illusion? You created it.
