AI Hardware: The Next Frontier for Smart Manufacturing

2025 has been a headline year for AI, not just in software but in the global economy.

AI doesn’t run on wishful thinking. It runs on silicon. And the global race to dominate AI is creating a hardware crisis hiding in plain sight.

While the spotlight shines on algorithms, smart manufacturing is quietly becoming the backbone of the AI revolution.

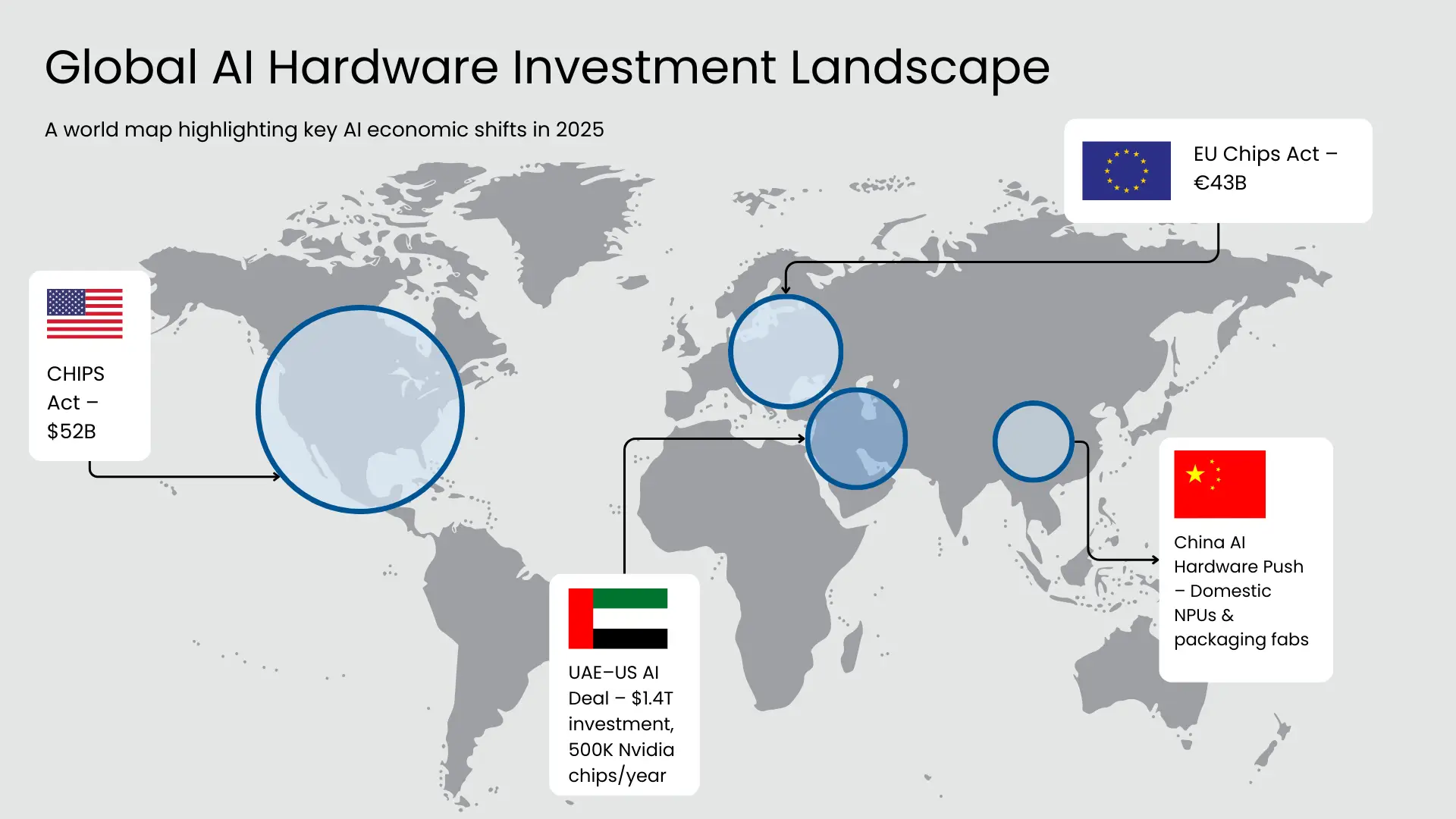

Governments Are Pouring Money Into Chips

In May, the UAE signed a $1.4 trillion AI investment agreement with the US, a move that includes importing over 500,000 Nvidia AI chips annually. These aren’t basic components. They’re some of the most powerful processors ever made, destined for data centres, national infrastructure, and industrial AI systems.

And they’re not alone:

- The U.S. CHIPS Act has already unlocked $52 billion in funding for domestic semiconductor capacity, including backend assembly and advanced packaging.

- The EU Chips Joint Undertaking is backing €43 billion+ in regional chip manufacturing, from German fabs to Hungarian test centres.

- China, meanwhile, is accelerating its own semiconductor sovereignty with a sharp focus on homegrown AI silicon and chiplet-level integration.

Each of these efforts has something in common: they all rely on complex, high-yield manufacturing capabilities to make AI hardware viable at scale.

IEEE data shows AI chips can draw 400–700 W under sustained load, which puts real pressure on packaging, cooling, and system-level testing.
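To put that power range in perspective, here is a minimal sketch that converts total draw into average power density. The die area is an illustrative assumption (a large die of roughly 800 mm²), not a figure from any vendor datasheet, and `power_density_w_per_cm2` is a hypothetical helper name.

```python
# Rough average power density for an AI accelerator die.
# DIE_AREA_MM2 is an illustrative assumption, not a sourced figure.

DIE_AREA_MM2 = 800.0  # hypothetical large die


def power_density_w_per_cm2(power_w: float, die_area_mm2: float) -> float:
    """Return average power density in W/cm^2 for a given draw and die area."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return power_w / (die_area_mm2 / 100.0)  # 100 mm^2 = 1 cm^2


if __name__ == "__main__":
    for watts in (400, 700):
        density = power_density_w_per_cm2(watts, DIE_AREA_MM2)
        print(f"{watts} W over {DIE_AREA_MM2:.0f} mm^2 -> {density:.1f} W/cm^2")
```

Under these assumptions the average lands in the tens of watts per square centimetre, and local hot spots will be higher than the average. That is why thermal design shows up in packaging decisions and not only in the data centre.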

AI Hardware Demands a Different Build Strategy

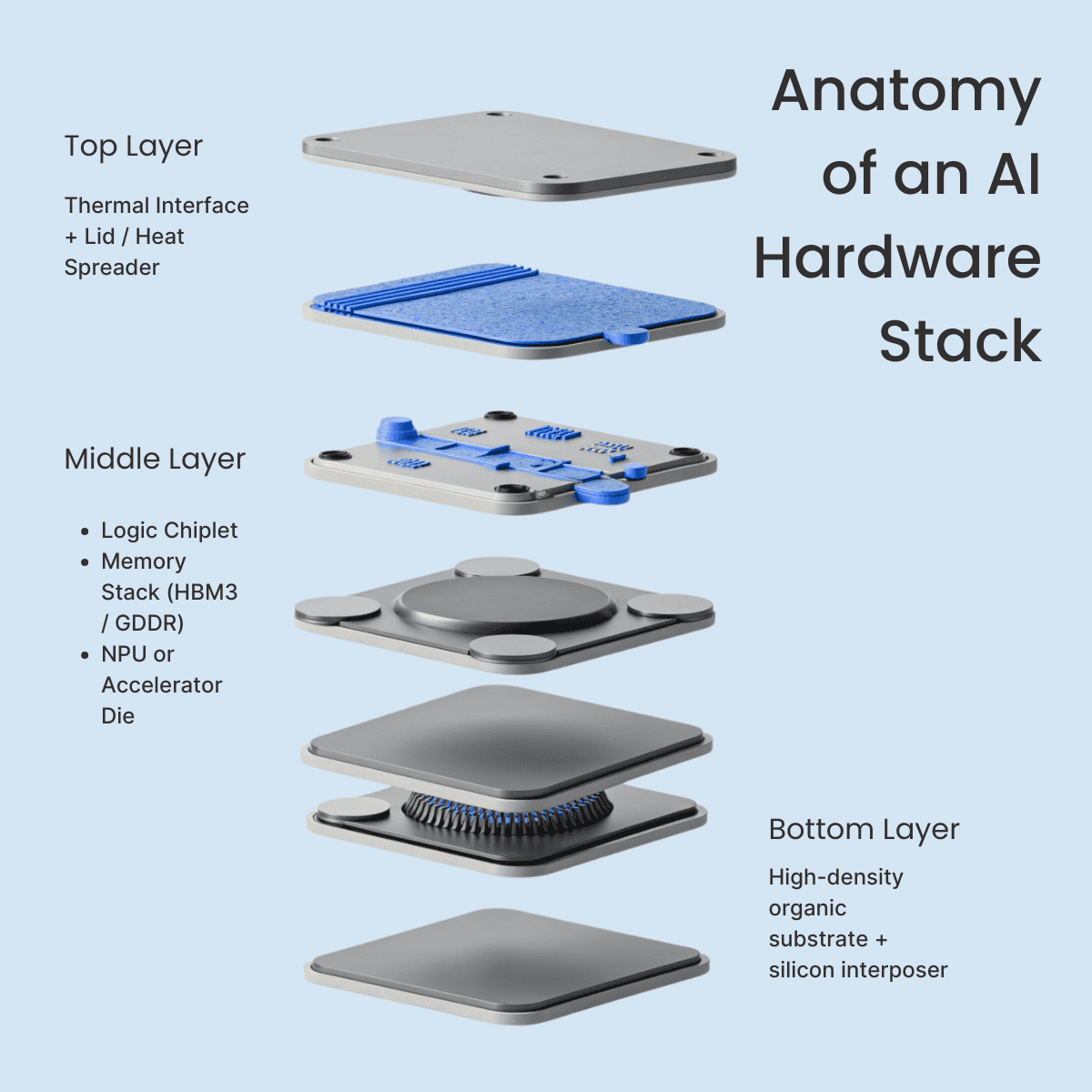

AI-specific processors—particularly those for LLM inference and real-time vision tasks—differ significantly from conventional SoCs.

Key challenges:

- Neural Processing Units (NPUs) with high ops/watt requirements

- HBM3 stacks, requiring ultra-fine pitch bonding and careful heat dissipation

- Die-to-die interconnects (e.g., UCIe, NVLink) driving new assembly tolerances

- Power density and thermal design limitations at the substrate level

The Chiplet Approach is Now Standard

To improve yields and accelerate roadmap cycles, major players have moved to chiplet-based architectures.

AMD’s EPYC and Intel’s Meteor Lake have already validated this approach. Nvidia’s H100 uses TSMC’s CoWoS-S packaging to integrate memory and logic dies at high density.

For contract manufacturers and ODMs, chiplets bring three challenges:

- Sub-micron die alignment

- Thermal expansion management during bonding

- Inter-die signal integrity validation

“Chiplet architectures solve the scaling problem in silicon. But they hand the complexity over to manufacturing.”

— Credo Semiconductor, Chiplet Summit 2024

Enter Chiplets: Modular Architecture, Complex Assembly

To meet performance targets while staying yield-positive, major players like AMD, Nvidia, and Intel are moving to chiplet-based architectures.

Chiplets allow manufacturers to:

- Use smaller dies (better yields)

- Combine specialised functions (logic, memory, I/O)

- Shorten design cycles

- Improve binning flexibility
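The yield argument for smaller dies can be sketched with a simple Poisson defect model, in which the chance that a die is defect-free falls exponentially with its area. This is one textbook approximation, not necessarily the model any particular foundry uses, and the defect density and die areas below are hypothetical values chosen only for illustration.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson defect model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


DEFECT_DENSITY = 0.1   # defects per cm^2 (hypothetical)
MONOLITHIC_AREA = 8.0  # cm^2, one large die (hypothetical)
NUM_CHIPLETS = 4       # same total silicon split into equal chiplets

# Yield of the single large die versus one of the smaller chiplets.
mono = poisson_yield(MONOLITHIC_AREA, DEFECT_DENSITY)
chiplet = poisson_yield(MONOLITHIC_AREA / NUM_CHIPLETS, DEFECT_DENSITY)

print(f"monolithic die yield: {mono:.1%}")
print(f"per-chiplet yield:    {chiplet:.1%}")
```

With these numbers, fewer than half of the large dies come out clean, while roughly four in five chiplets do. Because chiplets can be tested and discarded individually, a single defect scraps far less silicon, provided the assembly step that joins them stays reliable.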

But there’s a catch — chiplets are great for design, challenging for production.

What’s required:

- Sub-micron die placement

- 2.5D/3D advanced packaging

- Epoxy control, thermal expansion management

- High-speed interconnect testing before final seal
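A quick calculation shows why thermal expansion and sub-micron placement pull against each other. The sketch below applies the linear expansion formula (change in length = coefficient × length × temperature change) to silicon and to a substrate. The silicon coefficient is a commonly quoted approximate value. The substrate coefficient, package span, and temperature rise are assumptions for illustration only.

```python
def expansion_um(length_mm: float, cte_ppm_per_k: float, delta_t_k: float) -> float:
    """Linear thermal expansion in micrometres: dL = alpha * L * dT."""
    return cte_ppm_per_k * 1e-6 * (length_mm * 1000.0) * delta_t_k


SILICON_CTE = 2.6     # ppm/K, approximate value for silicon
SUBSTRATE_CTE = 15.0  # ppm/K, assumed value for an organic substrate
SPAN_MM = 50.0        # hypothetical span across the package
DELTA_T = 200.0       # K, hypothetical rise from room temperature during bonding

silicon_growth = expansion_um(SPAN_MM, SILICON_CTE, DELTA_T)
substrate_growth = expansion_um(SPAN_MM, SUBSTRATE_CTE, DELTA_T)
mismatch = substrate_growth - silicon_growth

print(f"silicon grows   {silicon_growth:.0f} um")
print(f"substrate grows {substrate_growth:.0f} um")
print(f"mismatch        {mismatch:.0f} um")
```

Under these assumptions the two materials drift apart by more than a hundred micrometres across the package, which is far more than a sub-micron placement budget allows. Bonding processes therefore have to control temperature profiles and material choices, not just pick-and-place accuracy.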

AI processors don’t just run hot. They run complex — with power, thermals, and packaging pushing manufacturing to its edge.

Smart Manufacturing: From Assembly Line to Engineering Platform

The assembly line isn’t dead — it’s just evolved.

Smart manufacturing now combines:

- Automated optical/X-ray inspection (AOI/AXI)

- Digital twins for inline process control

- Machine learning for predictive QA and failure analysis

- Real-time traceability for compliance and supplier transparency

These aren’t optional anymore. When you’re packaging AI chips with dozens of tiny components in one module, the cost of a single failure point compounds fast, especially when volumes run to hundreds of thousands of units per year (the UAE–Nvidia deal alone covers over 500,000 chips annually).
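The compounding effect is easy to see in a back-of-the-envelope model. The sketch below assumes every component in a module must be good for the module to pass, and that failures are independent. Both are simplifications. The component count and per-component yields are hypothetical, and the annual volume reuses the 500,000-chip figure cited earlier.

```python
def module_yield(per_component_yield: float, num_components: int) -> float:
    """Yield of a module in which every component must be good (independent failures)."""
    return per_component_yield ** num_components


ANNUAL_VOLUME = 500_000  # units per year, from the deal cited in the article
NUM_COMPONENTS = 50      # hypothetical count of components per module

for per_component in (0.999, 0.99):
    y = module_yield(per_component, NUM_COMPONENTS)
    failed = ANNUAL_VOLUME * (1.0 - y)
    print(f"per-component yield {per_component:.1%}: "
          f"module yield {y:.1%}, about {failed:,.0f} failed modules per year")
```

Even at 99.9% per component, roughly one module in twenty fails in this model. At 99%, it is closer to four in ten. That gap is the case for inline inspection and traceability: catching a bad component before it is sealed into a module is far cheaper than scrapping the finished part.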

Testing Isn’t the Final Step. It’s an Ongoing Process.

The old model: build, test, ship.

The new model: test during every stage of build.

- High-density interconnects require multi-stage electrical validation

- Thermals demand IR mapping and active load burn-in

- AI chips with mixed-signal components need isolated fault simulation

- Procurement teams need batch-level yield dashboards and traceability reports

Smart factories aren’t just building the hardware. They’re building the quality assurance infrastructure behind it — and doing it fast.

The AI race won’t be won only by whoever trains the biggest model, but by whoever can build the hardware that runs it, at scale.

From Hype to Hardware Reality: The Procurement View

For sourcing managers and technical buyers, here’s what this shift means:

- Component availability is no longer the only risk — packaging and backend assembly capacity are just as critical

- Regional diversification matters: US and EU subsidies are building domestic capacity, but time-to-volume still favours established players

- Vendor integration is becoming more strategic: Modular assembly, co-design services, and real-time quality feedback loops are now key differentiators

The AI boom isn’t just about who makes the chip — it’s about who can deliver it at scale, on time, and at spec.

AI Hardware is the New Supply Chain Battleground

From silicon design to system-level build, AI hardware is redefining what manufacturers need to deliver. Speed and performance aren’t enough anymore. What matters is modularity, precision, and scalability.
